Apple boosts inclusivity of its iPhones and iPads with new accessibility features

Tech giant Apple has announced that it is bringing new accessibility features to its iPads and iPhones to cater to a diverse range of user needs. These include the ability to control your device with eye-tracking technology, create custom shortcuts using your voice, experience music through haptics, and more.

The company unveiled the announcements ahead of Global Accessibility Awareness Day on Thursday.

For users with physical disabilities, Virtual Trackpad for AssistiveTouch lets them control their device by turning a small region of the screen into a resizable trackpad.

Users who are deaf or hard of hearing will benefit from “Music Haptics”, a new feature that lets them experience the millions of songs in Apple Music through a series of taps, textures and vibrations. It will also be available as an API, so developers of music apps can offer users a new and accessible way to experience audio.

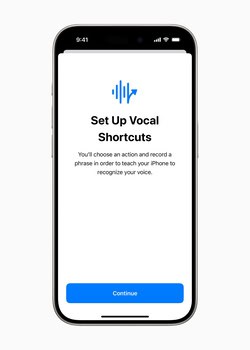

“Vocal Shortcuts,” another useful new feature, improves on Apple’s voice-based controls. It lets people assign custom sounds or words to launch shortcuts and complete tasks. For instance, a user could have Siri launch an app when they say something as simple as “Ah!”

The company also developed “Listen for Atypical Speech,” which uses machine learning to recognize unique speech patterns, and is designed for users with conditions that affect speech, including cerebral palsy, amyotrophic lateral sclerosis (ALS) and stroke, among others.

For users who are nonspeaking, Live Speech will add categories and will be able to run at the same time as Live Captions.

For users who are blind or have low vision, VoiceOver will include new voices, a flexible Voice Rotor, custom volume control, and the ability to customise VoiceOver keyboard shortcuts on Mac.

Braille users will get a new way to start and stay in Braille Screen Input for faster control and text editing. For users with low vision, Hover Typing shows larger text, in the user’s preferred font and colour, when they type in a text field.

Apple has previously supported eye tracking in iOS and iPadOS, but only with additional eye-tracking devices. This is the first time Apple has made it possible to control an iPad or iPhone with the eyes without extra hardware or accessories.

The new built-in eye-tracking option lets people use the front-facing camera to navigate through apps. It uses AI to work out what the user is looking at and which gesture, such as a swipe or a tap, they want to perform. There’s also Dwell Control, a feature that senses when a person’s gaze pauses on an element, indicating that they want to select it.

Apple also announced a new feature to help with motion sickness in cars. Looking at stationary content on a screen inside a moving vehicle can cause motion sickness, because what the user sees conflicts with what they feel. With the “Vehicle Motion Cues” setting turned on, animated dots appear on the edges of the screen and sway and move in the direction of the vehicle’s motion.

CarPlay is getting an update as well, including “Voice Control”; “Color Filters,” which make the interface easier to use for colorblind users, alongside Bold Text and Large Text options; and “Sound Recognition,” which notifies users who are deaf or hard of hearing of car horns and sirens.

Apple also revealed an accessibility feature coming to visionOS: Live Captions during FaceTime calls.

Tim Cook, Apple’s CEO, commented: “We believe deeply in the transformative power of innovation to enrich lives. That’s why for nearly 40 years, Apple has championed inclusive design by embedding accessibility at the core of our hardware and software. We’re continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users.”

In December 2023, e-commerce giant Amazon announced a new feature, Eye Gaze on Alexa, which lets people with mobility or speech disabilities use Alexa, Amazon’s virtual assistant, with their eyes.